How to set up a Varnish cache server

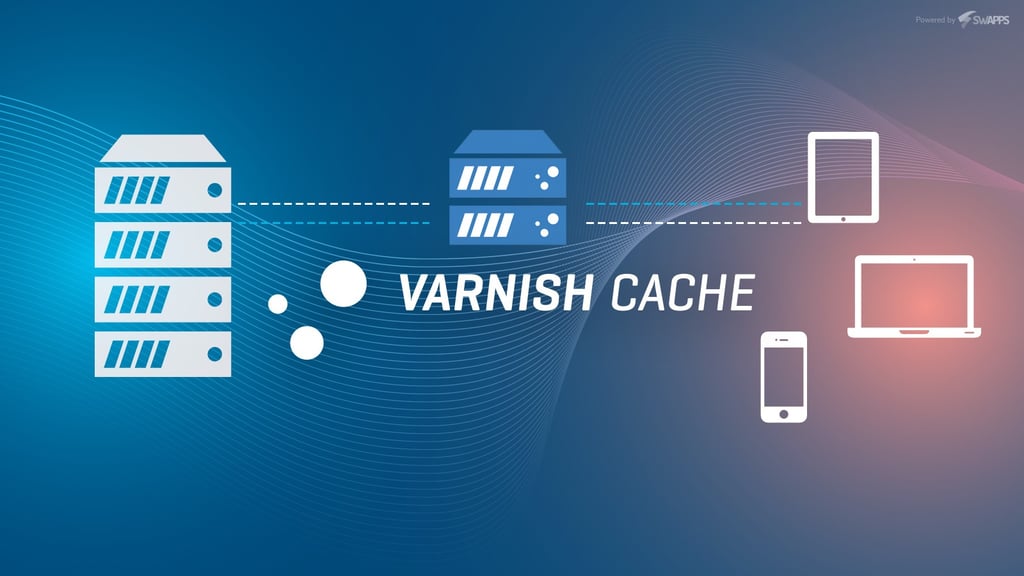

High-traffic websites need to serve the same content many times to different users. Depending on your application, it can be really expensive (in terms of resources) to run all the application logic every time a user requests a web page. This is where server caching comes in: you can save a temporary copy of the content in memory and serve that copy to all users.

Varnish is great for caching content on the server side. You will mainly cache HTML content, but you can also cache static files: CSS, JS, images, documents.

It sounds good, but the truth is that by default Varnish does almost nothing, or at least you could be wasting the advantages of this piece of software, and the documentation does not help much. So I have written this article to help you get the most benefit from Varnish. I’m going to explain where and how to configure, test and deploy a Varnish cache server for your application.

For demonstration purposes, let’s say we have two server instances, one for the app and one for the cache, with the following local IP addresses:

- App Server: 192.168.1.2

- Cache Server: 192.168.1.3

Install Varnish Cache Server

For the purposes of this article, we are going to use an Ubuntu 16.04 server with Varnish 4.0. To install Varnish, you just need to run:

sudo apt install varnish

This installs Varnish 4.0, and from now on you will be paying special attention to two files: /etc/default/varnish and /etc/varnish/default.vcl

Backend configuration

The first thing you need to do is configure the backend, that is, tell Varnish where the web application lives:

- What is the hostname or IP address?

- What is the port?

To define that, edit the file /etc/varnish/default.vcl and find the following section, which for our example is configured like this:

backend default {
    .host = "192.168.1.2";
    .port = "8080";
    .first_byte_timeout = 60s;
    .connect_timeout = 300s;
}

This tells Varnish to fetch content from the application running at IP 192.168.1.2 on port 8080.
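Before going further, it can help to confirm that the cache server can actually reach that backend. The following is only a minimal sketch: it assumes curl is installed on the cache server, and backend_url and check_backend are hypothetical helper names, not part of Varnish.

```shell
# Hypothetical helpers for checking the backend from the cache server.

# Build the URL Varnish will fetch from, given a host and a port.
backend_url() {
    printf 'http://%s:%s/\n' "$1" "$2"
}

# Print the HTTP status code returned by the backend.
# curl prints 000 when it cannot connect; --max-time avoids hanging.
check_backend() {
    curl -s -o /dev/null --max-time 5 -w '%{http_code}\n' "$(backend_url "$1" "$2")"
}

# Usage (run on the cache server):
#   check_backend 192.168.1.2 8080
```

If the status printed is not what the application normally returns, fix that first, because Varnish can only cache what it is able to fetch.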

Configure the Varnish daemon

The first thing you need to define is where Varnish will listen. We are going to leave it on the default port, 6081. It’s very common to run this daemon directly on port 80, but we prefer to put NGINX in front to handle the incoming traffic on ports 80 and 443 (SSL) and pass requests on to Varnish.

Regarding memory, a fresh Varnish installation runs with 256 MB of cache memory. That can be enough for some applications, but it might not be for high-traffic apps, especially if you have reserved a dedicated server just for caching.

You can change that at:

/etc/default/varnish

Find the following section:

DAEMON_OPTS="-a :6081 \
             -T localhost:6082 \
             -f /etc/varnish/default.vcl \
             -S /etc/varnish/secret \
             -s malloc,256m"

To change the amount of RAM, edit the last line, where it says 256m, and set the required value. In my case, I want to dedicate 3 GB of RAM to Varnish, so the block will look like this:

DAEMON_OPTS="-a :6081 \
             -T localhost:6082 \
             -f /etc/varnish/default.vcl \
             -S /etc/varnish/secret \
             -s malloc,3G"
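If you manage several cache servers, you may prefer to script this change instead of editing by hand. Here is a rough sketch using sed; set_varnish_memory is a hypothetical helper name, and it assumes the option is written as "-s malloc,<size>" like in the block above.

```shell
# Hypothetical helper: rewrite the "-s malloc,<size>" option in a config file.
# $1 = file to edit, $2 = new size (for example 3G or 512m).
# Every line containing the option is rewritten, including commented-out
# examples, so review the result afterwards.
set_varnish_memory() {
    # Keep a .bak copy, then replace only the size that follows "malloc,".
    cp "$1" "$1.bak" &&
    sed -E "s/(-s malloc,)[0-9]+[kKmMgG]?/\1$2/" "$1.bak" > "$1"
}

# Usage (as root):
#   set_varnish_memory /etc/default/varnish 3G
```

The backup copy lets you compare the two files with diff before restarting the service.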

Validate it’s running with the right configuration

Restart Varnish, then confirm that it’s running as expected. Check the process with ps aux | grep varnish and you should see something like:

/usr/sbin/varnishd -j unix,user=vcache -F -a :6081 -T :6082 -f /etc/varnish/default.vcl -S /etc/varnish/secret -s malloc,3G
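The part of that line you care about is the -s option at the end. As a small convenience, the sketch below pulls it out so it is easier to compare with what you configured; storage_option is a hypothetical helper name, and the usage line assumes the Linux procps version of ps.

```shell
# Hypothetical helper: read a varnishd command line on stdin and print
# the storage argument that follows "-s " (the uppercase -S is ignored).
storage_option() {
    sed -n -E 's/.* -s ([^ ]+).*/\1/p'
}

# Usage on a live server:
#   ps -o args= -C varnishd | storage_option
```

If this still prints malloc,256m after you changed the file, the service is probably not reading /etc/default/varnish at all.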

Fix Varnish startup daemon in some Ubuntu installations

We have found a bug in some Ubuntu installations where the service ignores the options defined in /etc/default/varnish, so you might need to edit the systemd service file.

To do that, open the file

/lib/systemd/system/varnish.service

and you will see something like this:

[Unit]
Description=Varnish HTTP accelerator
Documentation=https://www.varnish-cache.org/docs/4.1/ man:varnishd

[Service]
Type=simple
LimitNOFILE=131072
LimitMEMLOCK=infinity
ExecStart=/usr/sbin/varnishd -j unix,user=vcache -F -a :6081 -T :6082 -f /etc/varnish/default.vcl -S /etc/varnish/secret -s malloc,256M
ExecReload=/usr/share/varnish/reload-vcl
ProtectSystem=full
ProtectHome=true
PrivateTmp=true
PrivateDevices=true

[Install]
WantedBy=multi-user.target

To make it work, update the ExecStart line and replace it with the required configuration:

ExecStart=/usr/sbin/varnishd -j unix,user=vcache -F -a :6081 -T :6082 -f /etc/varnish/default.vcl -S /etc/varnish/secret -s malloc,3G

After that, reload the systemd configuration with systemctl daemon-reload and then restart Varnish.
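One caveat with editing files under /lib/systemd/system is that a package upgrade may overwrite them. A possible alternative, sketched here, is a systemd drop-in override that lives under /etc. This is not what the steps above do, just an option; write_varnish_override is a hypothetical helper name and the ExecStart line simply repeats the one from this article.

```shell
# Hypothetical helper: write a systemd drop-in that overrides ExecStart.
# $1 = drop-in directory, $2 = malloc size (for example 3G).
write_varnish_override() {
    mkdir -p "$1" &&
    cat > "$1/override.conf" <<EOF
[Service]
# An empty ExecStart= clears the packaged command before setting a new one.
ExecStart=
ExecStart=/usr/sbin/varnishd -j unix,user=vcache -F -a :6081 -T :6082 -f /etc/varnish/default.vcl -S /etc/varnish/secret -s malloc,$2
EOF
}

# Usage (as root):
#   write_varnish_override /etc/systemd/system/varnish.service.d 3G
#   systemctl daemon-reload
#   systemctl restart varnish
```

The empty ExecStart= line matters: without it, systemd rejects a second ExecStart for a Type=simple service.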

How to configure cache PURGE

There are 2 ways to clear the Varnish cache:

- Restart Varnish service.

- Send a PURGE request to Varnish server.

Restarting Varnish is just a matter of restarting the service:

sudo service varnish restart

But what we really need is to be able to send a PURGE request from the application server, asking Varnish to purge a specific path or all of them. Using curl, the request would look like:

curl -X PURGE http://192.168.1.3:6081
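Keep in mind that Varnish looks up cached objects by URL and Host header by default, so a purge sent to the bare IP may not match pages cached under your site’s hostname. Here is a minimal sketch of a purge helper that sends the Host header explicitly. It assumes curl is installed; purge_target, purge_path and www.example.com are hypothetical names used only for illustration.

```shell
# Cache server address used in this article.
CACHE_SERVER="192.168.1.3:6081"

# Build the URL to purge for a given path.
purge_target() {
    printf 'http://%s%s\n' "$CACHE_SERVER" "$1"
}

# Send a PURGE for one path and print the HTTP status code.
# $1 = hostname visitors use for the site, $2 = path to purge.
purge_path() {
    curl -s -o /dev/null --max-time 5 -w '%{http_code}\n' \
        -X PURGE -H "Host: $1" "$(purge_target "$2")"
}

# Usage (from the app server):
#   purge_path www.example.com /about/
```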

By default Varnish won’t accept a PURGE request from an external server, so you need to allow requests from the application server. To do so, edit /etc/varnish/default.vcl and find (or add) the purge ACL, where you add the app server IP address:

acl purge {
    "localhost";
    "127.0.0.1";
    # The /24 mask allows the whole 192.168.1.0/24 subnet, not only the app server.
    "192.168.1.2"/24;
}
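An ACL on its own does nothing until vcl_recv checks it. If your default.vcl does not already handle PURGE, the handler usually looks like the one below, following the pattern in the Varnish 4 documentation. To keep all the examples here in shell, this sketch writes the VCL to a scratch file for review; write_purge_handler and the /tmp path are hypothetical.

```shell
# Hypothetical helper: write a PURGE handler to a scratch file for review.
# $1 = output path. Merge the result into /etc/varnish/default.vcl by hand.
write_purge_handler() {
    cat > "$1" <<'EOF'
sub vcl_recv {
    if (req.method == "PURGE") {
        # Reject clients that are not listed in the "purge" ACL.
        if (!client.ip ~ purge) {
            return (synth(405, "Not allowed."));
        }
        # Drop the cached object for this URL and Host.
        return (purge);
    }
}
EOF
}

# Usage:
#   write_purge_handler /tmp/purge-handler.vcl
```

After merging it, running varnishd -C -f /etc/varnish/default.vcl should compile the file and report any syntax error before you restart. Note that return (purge) only removes the object for that exact URL and Host; pattern-style purges need ban logic, which is outside the scope of this article.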

How to debug the PURGE

You will need to validate that everything is working correctly. To do that, run the following command on the cache server:

varnishlog -g request -q 'ReqMethod eq "PURGE"'

Then send a PURGE request. You should see something like this, confirming that the PURGE request was received:

*   << Request >> 1179851
-   Begin          req 1179850 rxreq
-   ReqStart       192.168.195.197 39700
-   ReqMethod      PURGE
-   ReqURL         /.*
-   ReqProtocol    HTTP/1.1
-   ReqHeader      Host: swapps.com
-   ReqHeader      User-Agent: W3 Total Cache
-   ReqHeader      Connection: close
-   ReqHeader      X-Forwarded-For: 192.168.195.197
-   VCL_call       RECV
-   Timestamp      Process: 1531199642.768541 0.000094 0.000094
-   RespHeader     Date: Tue, 10 Jul 2018 05:14:02 GMT
-   RespHeader     Server: Varnish
-   RespHeader     X-Varnish: 1179851
-   RespProtocol   HTTP/1.1
-   RespStatus     200
-   RespReason     OK
-   RespReason     Purged
-   End

A 200 status with the reason Purged means everything went well and Varnish has cleared the cache for the requested URL. You now have everything you need to start caching content on your server.

The next step, if you haven’t done it yet, is to configure the rules for which content to cache and which not. That’s a topic for another blog post, and it will depend heavily on the type of application, framework or CMS used.