To keep your infrastructure under control, monitoring is one of the most important tools. You need to know the status of your servers, services and applications.

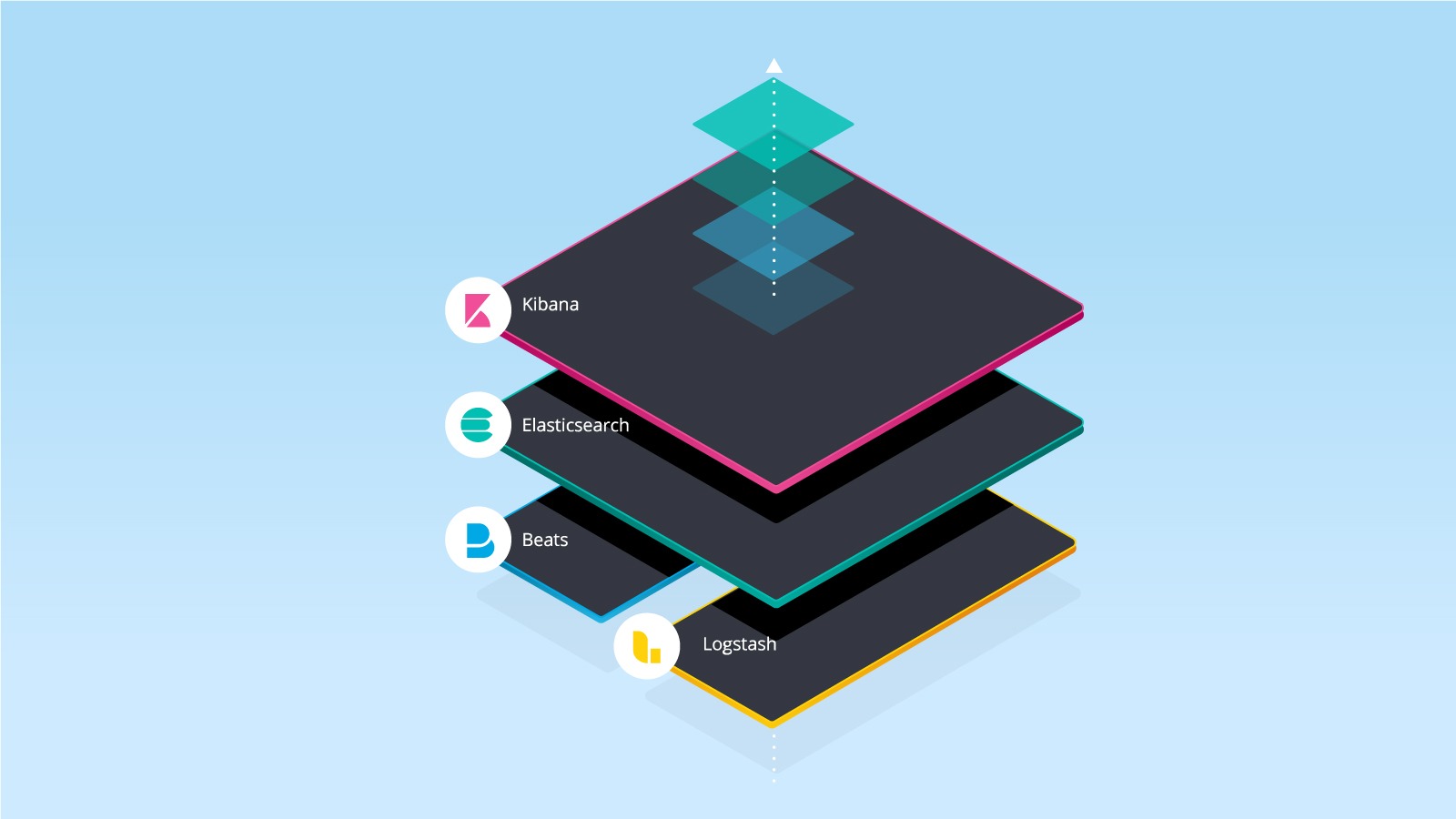

There are several challenges to this, and some very interesting solutions, like the ones offered by Netdata or New Relic. I wanted to try another tool that I recently saw in action: the Elastic Stack (also known as the ELK Stack). This stack has several layers, with a powerful search engine at its core: Elasticsearch.

At the very bottom we have the Beats. The official documentation describes them as lightweight data shippers. They are client applications that live on our servers and periodically send data to Elasticsearch. There are Beats for collecting metrics, extracting logs, checking the availability of websites, etc.
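To make the "data shipper" idea concrete, here is a toy sketch in Python of what shipping boils down to: turn a measurement into a JSON document and format a batch of documents for Elasticsearch's `_bulk` endpoint, which expects newline-delimited JSON. This is not how the real Beats are implemented. The index name `toy-metrics` and the `metric`/`value` field names are made up for illustration.

```python
import json
from datetime import datetime, timezone


def make_event(host, metric, value, now=None):
    """Build one document; the field layout here is hypothetical."""
    now = now or datetime.now(timezone.utc)
    return {
        "@timestamp": now.isoformat(),
        "host": {"name": host},
        "metric": metric,
        "value": value,
    }


def to_bulk_payload(index, events):
    """Format events as NDJSON for the _bulk API: one action line per document."""
    lines = []
    for event in events:
        lines.append(json.dumps({"index": {"_index": index}}))
        lines.append(json.dumps(event))
    # The bulk body must end with a newline.
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    events = [make_event("web-1", "cpu", 0.42)]
    print(to_bulk_payload("toy-metrics", events), end="")
```

A real shipper would POST this payload to the cluster on a timer and deal with retries, buffering and authentication, which is exactly the work the Beats take off your hands.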

The next layer is the heart of the stack: the search engine. All the data goes in there and is indexed for later consumption. Every beat gets its own index. You can aggregate or explore data easily and quickly because the full power of the search engine is there for you to use.
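As an illustration of the kind of aggregation the search engine makes possible, the sketch below builds a search body that asks for the average CPU usage per host over the last few minutes. It only constructs the JSON; sending it (for example to the `_search` endpoint of a `metricbeat-*` index pattern) is left out. The field names `host.name` and `system.cpu.total.pct` are assumptions about how Metricbeat stores its data and may differ in your version.

```python
import json


def build_cpu_by_host_query(minutes=15, max_hosts=10):
    """Search body: average CPU per host over a recent time window."""
    return {
        "size": 0,  # we only want the aggregation, not the raw documents
        "query": {"range": {"@timestamp": {"gte": f"now-{minutes}m"}}},
        "aggs": {
            "per_host": {
                # Assumed field names; check your own index mapping.
                "terms": {"field": "host.name", "size": max_hosts},
                "aggs": {
                    "avg_cpu": {"avg": {"field": "system.cpu.total.pct"}}
                },
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_cpu_by_host_query(), indent=2))
```

The same body can be pasted into Kibana's Dev Tools console, which is a handy way to explore what each index contains.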

At the outer layer, the closest to the user, you can find Kibana. This is a visualization tool. The official site describes it as your window into the stack.

Here you can build graphs, dashboards and other views of interest, and filter them as desired using the underlying search engine.

What’s the best part of all this? The whole stack is open source. You can download it and install it. You can set up the whole system on your own and integrate it into your infrastructure. There are some paid bits (alerts, for example), but the most essential bits are there.

You can also use the SaaS offering: Elastic Cloud. They provide a 14-day trial and a simple interface to set up your clusters. You don’t have much flexibility during the trial, but once you move to a paid version you can resize your infrastructure just by moving some sliders. The clusters are hosted on Amazon or Google Cloud, so the instances are pretty reliable.

Now, on to the implementation. I installed Filebeat and Metricbeat. These beats give me a lot of information about my infrastructure.

Filebeat extracts the logs from the enabled modules (system, nginx, etc.) and sends them to Elasticsearch. Kibana has a real-time feed of all the logs in the whole infrastructure, and extracts relevant information from there. Kibana also has some dashboards that display important details, like how often users try to use sudo, or which usernames are being used to attempt logins to the server.

Metricbeat sends metrics to Elasticsearch. Those metrics are the usual ones: CPU, memory, disk, etc. Other metrics can be enabled and configured. With this data in Kibana, you can see your whole infrastructure on the same screen, at a glance. If a server goes beyond some limits for a given metric, it will be highlighted.

You can also check the details of every host, see the historical values, and refresh them in real time.
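The highlighting idea is simple enough to sketch in a few lines of Python. This is not Kibana's implementation, just the logic behind it: compare each metric against a warning and a critical limit and keep only what needs attention. The thresholds, host names and metric names below are all hypothetical.

```python
# Hypothetical limits, expressed as fractions of capacity (0.0 to 1.0).
WARN, CRIT = 0.75, 0.90


def classify(value, warn=WARN, crit=CRIT):
    """Map a metric value to a status label."""
    if value >= crit:
        return "critical"
    if value >= warn:
        return "warning"
    return "ok"


def highlight(hosts):
    """Return {host: {metric: status}} containing only metrics that are not ok."""
    flagged = {}
    for host, metrics in hosts.items():
        bad = {
            name: classify(value)
            for name, value in metrics.items()
            if classify(value) != "ok"
        }
        if bad:
            flagged[host] = bad
    return flagged


if __name__ == "__main__":
    sample = {
        "web-1": {"cpu": 0.95, "memory": 0.40},
        "db-1": {"cpu": 0.10, "disk": 0.80},
    }
    print(highlight(sample))
```

With the sample data, `web-1` is flagged for CPU and `db-1` for disk, while healthy metrics are left out of the result.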

There are many more beats and modules that you can integrate into the stack, and it is easy to automate the integration of the beats into your servers.

Give it a try. It is very flexible and easy to set up!